Introduction to Deep Q-Learning

In artificial intelligence, reinforcement learning sits at the intersection of machine learning and decision-making. One of its core algorithms is Q-learning, which lets an agent learn a good strategy for a task by estimating how valuable each action is in each situation. As tasks grow more complex, traditional Q-learning runs into limitations that Deep Q-Learning (DQL) was designed to overcome.

The Basics of Reinforcement Learning

Reinforcement learning is a machine learning paradigm in which an agent interacts with an environment and tries to maximize a cumulative reward. The agent learns through trial and error, much as humans learn from experience. Central to Q-learning is the concept of Q-values: a Q-value represents the expected cumulative reward an agent can achieve by taking a specific action in a given state and then continuing to act according to its strategy.
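To make "cumulative reward" concrete, here is a minimal Python sketch. It assumes a discount factor `gamma`, which the text above does not mention but which is the usual way to weight near-term rewards more than distant ones. The function name and the example rewards are hypothetical.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards, each weighted by gamma**t (gamma is an assumed discount factor)."""
    total = 0.0
    # Work backwards so each step is: reward now + gamma * (return from the next step on).
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical episode: three steps, each giving a reward of 1.
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1 + 0.5 + 0.25 = 1.75
```

A Q-value can be read as the average of this quantity over the many ways an episode might unfold after taking a given action in a given state.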

Evolution of Q-Learning

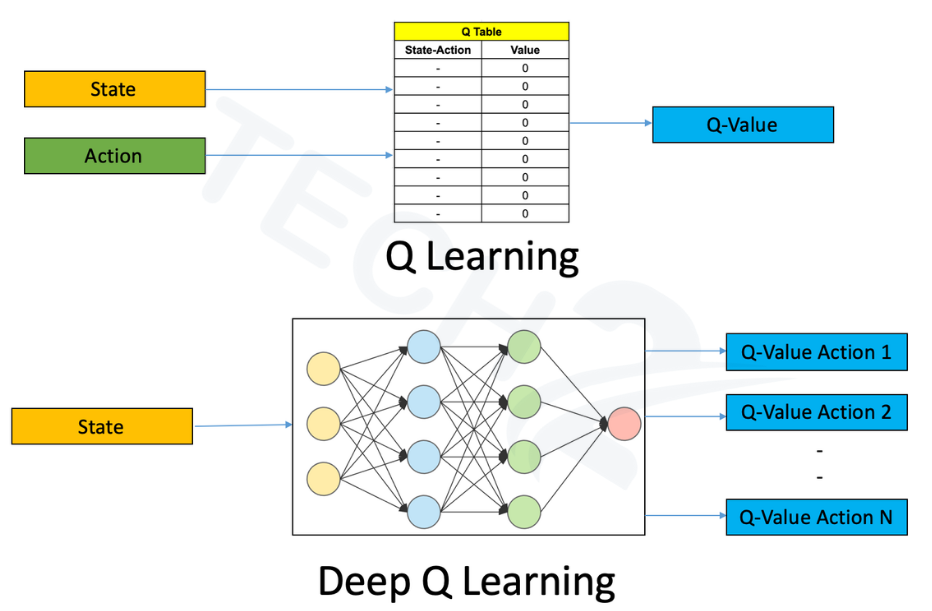

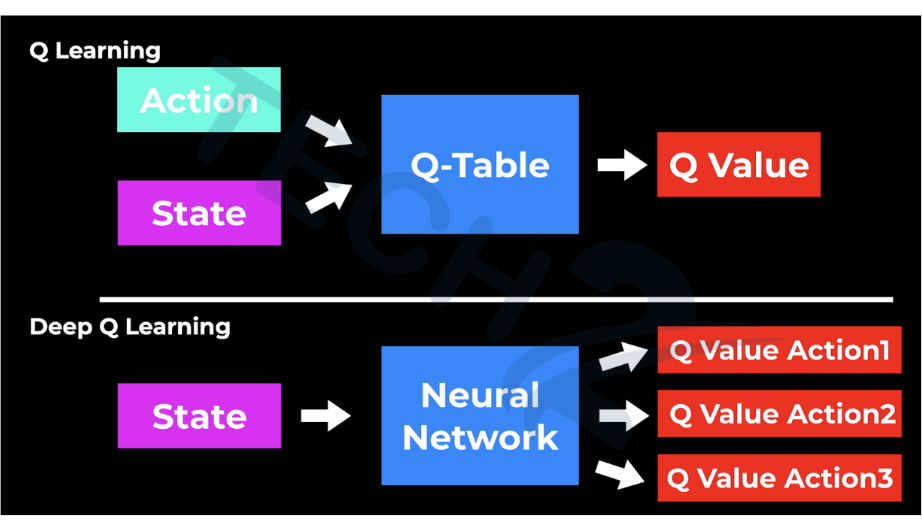

While traditional Q-learning was groundbreaking, it stores a separate Q-value for every state-action pair, which becomes impractical in complex, high-dimensional environments such as those with image inputs. Deep neural networks offered a way around this: instead of filling in a table, a network approximates the Q-values and can generalize across similar states. This combination of Q-learning and deep neural networks marked the inception of Deep Q-Learning, a major shift in the field of reinforcement learning.
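For comparison, traditional Q-learning can be sketched as a table lookup plus one update rule. The snippet below is an illustrative sketch, not a reference implementation: the learning rate `alpha`, the discount factor `gamma`, and the toy states and actions are all assumptions made for the example.

```python
from collections import defaultdict


def q_learning_update(q_table, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.99):
    """Apply one tabular Q-learning update in place and return the new value."""
    # Best value the table currently predicts from the next state.
    best_next = max(q_table[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    # Move the stored estimate a fraction alpha of the way toward the target.
    q_table[(state, action)] += alpha * (td_target - q_table[(state, action)])
    return q_table[(state, action)]


# One entry per (state, action) pair; unseen pairs default to 0.0.
q_table = defaultdict(float)
actions = ["left", "right"]
q_learning_update(q_table, state=0, action="right", reward=1.0,
                  next_state=1, actions=actions, alpha=0.5, gamma=0.9)
print(q_table[(0, "right")])  # 0.5
```

Because the table needs one entry per state-action pair, it grows with the number of distinct states, which is the limitation that motivates replacing it with a neural network.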

Deep Q-Learning in Action

At the core of Deep Q-Learning is the Q-network, a deep neural network that approximates the Q-values. This network takes the current state as input and outputs a Q-value for each possible action. To make learning more stable, experience replay is employed. This technique stores past experiences (state, action, reward, next state) in a replay memory and trains the Q-network on random samples drawn from it. Random sampling breaks the strong correlation between consecutive steps and lets each experience be reused several times.
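The two pieces described above can be sketched with the Python standard library alone. This is a simplified illustration under stated assumptions: `ReplayMemory` and `TinyQNetwork` are hypothetical names, the network has a single hidden layer with random weights and no biases, and only the forward pass is shown (no training step). A real system would typically use a deep learning framework.

```python
import random
from collections import deque, namedtuple

Transition = namedtuple("Transition", "state action reward next_state done")


class ReplayMemory:
    """Fixed-size buffer; the oldest transitions are dropped once it is full."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, *args):
        self.buffer.append(Transition(*args))

    def sample(self, batch_size):
        # Uniform random sampling breaks up the order in which steps were collected.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


class TinyQNetwork:
    """One hidden ReLU layer: state vector in, one Q-value per action out."""

    def __init__(self, state_dim, hidden_dim, n_actions, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(state_dim)]
                   for _ in range(hidden_dim)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_dim)]
                   for _ in range(n_actions)]

    def q_values(self, state):
        hidden = [max(0.0, sum(w * s for w, s in zip(row, state)))
                  for row in self.w1]
        return [sum(w * h for w, h in zip(row, hidden)) for row in self.w2]


# Fill the memory with made-up transitions, then draw a random minibatch.
memory = ReplayMemory(capacity=1000)
for step in range(50):
    memory.push([float(step), 0.0], step % 2, 1.0, [float(step + 1), 0.0], False)

net = TinyQNetwork(state_dim=2, hidden_dim=8, n_actions=2)
for t in memory.sample(batch_size=4):
    q = net.q_values(t.state)
    greedy_action = max(range(len(q)), key=q.__getitem__)
    print(t.state, q, greedy_action)
```

The `deque` with `maxlen` keeps memory use bounded, so old experiences are gradually replaced by newer ones as the agent's behavior changes.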

Overcoming Challenges with Deep Q-Learning

Deep Q-Learning brings new challenges of its own. One prominent issue is instability: the Q-values can oscillate or diverge during training, partly because the targets the network learns from are computed by the same network that is being updated. Target networks address this by using a separate, periodically updated copy of the Q-network to estimate the target Q-values, which helps stabilize learning. Another challenge is the trade-off between speed and stability, as training deep neural networks is computationally intensive.
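The target-network idea can be sketched as follows. This is a toy illustration under several assumptions: `LinearQ` is a hypothetical stand-in for a real Q-network, the discount factor `gamma` and the sync interval `SYNC_EVERY` are arbitrary, and the line that nudges a weight is only a placeholder for a real gradient step.

```python
import copy


def td_targets(batch, target_q_fn, gamma=0.99):
    """Compute learning targets from the frozen target network, not the online one."""
    targets = []
    for state, action, reward, next_state, done in batch:
        if done:
            # No future reward after a terminal step.
            targets.append(reward)
        else:
            targets.append(reward + gamma * max(target_q_fn(next_state)))
    return targets


class LinearQ:
    """Stand-in for a Q-network: Q(s, a) = weights[a] * s for a scalar state."""

    def __init__(self, weights):
        self.weights = list(weights)

    def __call__(self, state):
        return [w * state for w in self.weights]


online = LinearQ([1.0, 2.0])
target = copy.deepcopy(online)  # frozen copy used only for targets
SYNC_EVERY = 100                # assumed sync interval

batch = [(1.0, 0, 1.0, 2.0, False), (2.0, 1, 5.0, 3.0, True)]
for step in range(1, 201):
    y = td_targets(batch, target, gamma=0.9)
    online.weights[0] += 0.01   # placeholder for a real gradient step toward y
    if step % SYNC_EVERY == 0:
        target = copy.deepcopy(online)  # refresh the frozen copy
```

Between syncs the targets stay fixed even though the online network keeps changing, which is what damps the feedback loop behind the oscillations.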

Real-World Applications

Deep Q-Learning has been applied across various domains. In gaming, it reached human-level performance on many classic Atari games while learning directly from screen pixels, showing that a single algorithm can handle a range of different tasks. In autonomous driving, it has been explored as a way to learn driving strategies for complex environments. In robotics, DQL is used to improve decision-making so that robots can interact more reliably with their surroundings.

Potential and Future Developments

Research in Deep Q-Learning is ongoing, with efforts focused on addressing its limitations and expanding its applicability. Hybrid approaches that combine DQL with other AI techniques like imitation learning or hierarchical reinforcement learning hold promise for tackling even more complex problems. As AI continues to evolve, Deep Q-Learning remains a pivotal milestone in the journey towards artificial intelligence that learns and adapts like humans.

Conclusion

Deep Q-Learning changed the landscape of reinforcement learning by letting AI agents handle tasks too complex for table-based methods. Its integration of Q-learning principles with deep neural networks has opened the way to tackling real-world problems across diverse domains. Looking ahead, its influence on AI is likely to persist as researchers build on it to improve intelligent decision-making.
